Show That Where a,b,c Are Any Real Number Has a Root Between -1 and 0

Introduction

Decision trees are among the most respected algorithms in machine learning and data science. They are transparent, easy to understand, robust in nature and widely applicable. You can actually see what the algorithm is doing and what steps it performs to get to a solution. This trait is particularly important in a business context when it comes to explaining a decision to stakeholders.

This skill test was specially designed for you to test your knowledge of decision tree techniques. More than 750 people registered for the test. If you are one of those who missed out on this skill test, here are the questions and solutions.

Here is the leaderboard for the participants who took the test.

Helpful Resources

Here are some resources to get in-depth knowledge of the subject.

- Machine Learning Certification Course for Beginners

- A Complete Tutorial on Tree Based Modeling from Scratch (in R & Python)

- 45 questions to test Data Scientists on Tree Based Algorithms (Decision tree, Random Forests, XGBoost)

Are you a beginner in Machine Learning? Do you want to master machine learning algorithms like Random Forest and XGBoost? Here is a comprehensive course covering machine learning and deep learning algorithms in detail:

- Certified AI & ML Blackbelt+ Program

Skill Test Questions and Answers

1) Which of the following is/are true about bagging trees?

- In bagging trees, individual trees are independent of each other

- Bagging is the method for improving the performance by aggregating the results of weak learners

A) 1

B) 2

C) 1 and 2

D) None of these

Solution: C

Both options are true. In bagging, individual trees are independent of each other because they consider different subsets of features and samples.

2) Which of the following is/are true about boosting trees?

- In boosting trees, individual weak learners are independent of each other

- It is the method for improving the performance by aggregating the results of weak learners

A) 1

B) 2

C) 1 and 2

D) None of these

Solution: B

In boosting, individual weak learners are not independent of each other because each tree corrects the results of the previous tree. Bagging and boosting can both be considered as improving the base learners' results.

3) Which of the following is/are true about Random Forest and Gradient Boosting ensemble methods?

- Both methods can be used for classification task

- Random Forest is used for classification whereas Gradient Boosting is used for regression task

- Random Forest is used for regression whereas Gradient Boosting is used for classification task

- Both methods can be used for regression task

A) 1

B) 2

C) 3

D) 4

E) 1 and 4

Solution: E

Both algorithms are designed for classification as well as regression tasks.

4) In Random Forest you can generate hundreds of trees (say T1, T2 … Tn) and then aggregate the results of these trees. Which of the following is true about an individual (Tk) tree in Random Forest?

- Individual tree is built on a subset of the features

- Individual tree is built on all the features

- Individual tree is built on a subset of observations

- Individual tree is built on full set of observations

A) 1 and 3

B) 1 and 4

C) 2 and 3

D) 2 and 4

Solution: A

Random Forest is based on the bagging concept, which considers a fraction of the samples and a fraction of the features for building the individual trees.
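The sampling behind answer A can be sketched in a few lines. This is only an illustration under stated assumptions: the helper name `draw_tree_sample`, its parameters, and the toy sizes are hypothetical, and many library implementations pick the feature subset at each split rather than once per tree.

```python
import random

def draw_tree_sample(n_rows, n_features, max_features, rng):
    """Pick the rows and features one tree (Tk) is trained on."""
    # Bootstrap: draw n_rows row indices with replacement.
    row_idx = [rng.randrange(n_rows) for _ in range(n_rows)]
    # Random subset of feature indices, drawn without replacement.
    feat_idx = sorted(rng.sample(range(n_features), max_features))
    return row_idx, feat_idx

rng = random.Random(0)  # fixed seed so the sketch is repeatable
for k in range(3):
    rows, feats = draw_tree_sample(n_rows=10, n_features=5, max_features=2, rng=rng)
    print(f"T{k + 1}: {len(set(rows))} distinct rows, features {feats}")
```

Because rows are drawn with replacement, each tree usually sees only part of the distinct observations, which is what keeps the trees different from one another.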

5) Which of the following is true about the "max_depth" hyperparameter in Gradient Boosting?

- Lower is better parameter in case of same validation accuracy

- Higher is better parameter in case of same validation accuracy

- Increasing the value of max_depth may overfit the data

- Increasing the value of max_depth may underfit the data

A) 1 and 3

B) 1 and 4

C) 2 and 3

D) 2 and 4

Solution: A

Increasing the depth beyond a certain value may overfit the data, and if two depth values give the same validation accuracy we always prefer the smaller depth in final model building.
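The tie-breaking rule in this answer can be written as a small selection function. The sketch below assumes you already have one validation accuracy per candidate depth; the function name `pick_depth` and the numbers are hypothetical.

```python
def pick_depth(results):
    """results: list of (max_depth, validation_accuracy) pairs."""
    # Sort key: highest accuracy first, then smallest depth among ties.
    return min(results, key=lambda r: (-r[1], r[0]))[0]

# Hypothetical validation results, not taken from the skill test.
results = [(2, 0.81), (4, 0.86), (6, 0.86), (8, 0.84)]
print(pick_depth(results))
```

Depths 4 and 6 tie on accuracy here, so the function returns the shallower one.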

6) Which of the following algorithms doesn't use learning rate as one of its hyperparameters?

- Gradient Boosting

- Extra Trees

- AdaBoost

- Random Forest

A) 1 and 3

B) 1 and 4

C) 2 and 3

D) 2 and 4

Solution: D

Random Forest and Extra Trees don't have learning rate as a hyperparameter.

7) Which of the following algorithms would you take into consideration in your final model building on the basis of performance?

Suppose you are given the following graph, which shows the ROC curve for two different classification algorithms: Random Forest (red) and Logistic Regression (blue).

A) Random Forest

B) Logistic Regression

C) Both of the above

D) None of these

Solution: A

Since Random Forest has the largest AUC in the picture, I would prefer Random Forest.
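When the ROC curves are not available as a picture, the same comparison can be made numerically. The sketch below computes AUC as the probability that a randomly chosen positive is scored above a randomly chosen negative. The labels and scores are hypothetical and are not read off the quiz chart.

```python
def auc(labels, scores):
    """AUC as the probability that a positive outranks a negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half
    return wins / (len(pos) * len(neg))

# Hypothetical labels and model scores, for illustration only.
labels = [1, 1, 1, 0, 0, 0]
scores_rf = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
scores_lr = [0.6, 0.4, 0.7, 0.5, 0.8, 0.3]
candidates = {"Random Forest": scores_rf, "Logistic Regression": scores_lr}
best = max(candidates, key=lambda name: auc(labels, candidates[name]))
print(best)
```

The pairwise loop is quadratic, which is fine for a sketch; a library routine would be the usual choice on real data.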

8) Which of the following is true about training and testing error in such a case?

Suppose you want to use the AdaBoost algorithm on data D which has T observations. You set half the data for training and half for testing initially. Now you want to increase the number of data points for training T1, T2 … Tn where T1 < T2 … Tn-1 < Tn.

A) The difference between training error and test error increases as number of observations increases

B) The difference between training error and test error decreases as number of observations increases

C) The difference between training error and test error will not change

D) None of these

Solution: B

As we have more and more data, training error increases and testing error decreases. And they all converge to the true error.

9) In random forest or gradient boosting algorithms, features can be of any type. For example, a feature can be continuous or categorical. Which of the following options is true when you consider these types of features?

A) Only Random Forest algorithm handles real valued attributes by discretizing them

B) Only Gradient Boosting algorithm handles real valued attributes by discretizing them

C) Both algorithms can handle real valued attributes by discretizing them

D) None of these

Solution: C

Both can handle real valued features.
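One common way a tree turns a real valued attribute into a discrete test is to try thresholds halfway between consecutive sorted values. The sketch below illustrates that idea only; the helper names and the `ages` column are hypothetical, and actual libraries may bin values differently.

```python
def candidate_thresholds(values):
    """Midpoints between consecutive distinct sorted values."""
    distinct = sorted(set(values))
    return [(a + b) / 2 for a, b in zip(distinct, distinct[1:])]

def discretize(value, threshold):
    """Turn a real value into the two-way test a tree node applies."""
    return "left" if value <= threshold else "right"

ages = [22.0, 35.0, 35.0, 41.0, 58.0]  # hypothetical feature column
cuts = candidate_thresholds(ages)
print(cuts)
print([discretize(a, cuts[1]) for a in ages])
```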

10) Which of the following algorithms is not an example of an ensemble learning algorithm?

A) Random Forest

B) Adaboost

C) Extra Trees

D) Gradient Boosting

E) Decision Trees

Solution: E

A decision tree doesn't aggregate the results of multiple trees, so it is not an ensemble algorithm.

11) Suppose you are using a bagging based algorithm, say a RandomForest, in model building. Which of the following can be true?

- Number of trees should be as large as possible

- You will have interpretability after using RandomForest

A) 1

B) 2

C) 1 and 2

D) None of these

Solution: A

Since Random Forest aggregates the results of different weak learners, if possible we would want a larger number of trees in model building. Random Forest is a black box model; you will lose interpretability after using it.

Context 12-15

Consider the following figure for answering the next few questions. In the figure, X1 and X2 are the two features and the data points are represented by dots (-1 is the negative class and +1 is the positive class). You first split the data based on feature X1 (say the splitting point is x11), which is shown in the figure using a vertical line. Every value less than x11 will be predicted as positive class and every value greater than x11 will be predicted as negative class.

12) How many data points are misclassified in the above image?

A) 1

B) 2

C) 3

D) 4

Solution: A

Only one observation is misclassified: one negative class point appears on the left side of the vertical line, so it will be predicted as positive class.

13) Which of the following splitting points on feature X1 will classify the data correctly?

A) Greater than x11

B) Less than x11

C) Equal to x11

D) None of the above

Solution: D

If you search any point on X1 you won't find any point that gives 100% accuracy.

14) If you consider only feature X2 for splitting, can you now perfectly separate the positive class from the negative class for any one split on X2?

A) Yes

B) No

Solution: B

It is also not possible.

15) Now consider only one split on each feature (one on X1 and one on X2). You can split both features at any point. Would you be able to classify all data points correctly?

A) TRUE

B) FALSE

Solution: B

You won't find such a case because you get a minimum of 1 misclassification.

Context 16-17

Suppose you are working on a binary classification problem with 3 input features, and you chose to apply a bagging algorithm (X) on this data. You chose max_features = 2 and n_estimators = 3. Now, suppose that each estimator has 70% accuracy.

Note: Algorithm X is aggregating the results of individual estimators based on maximum voting

16) What will be the maximum accuracy you can get?

A) 70%

B) 80%

C) 90%

D) 100%

Solution: D

Refer to the table below for models M1, M2 and M3.

| Actual predictions | M1 | M2 | M3 | Output |
|---|---|---|---|---|
| 1 | 1 | 0 | 1 | 1 |
| 1 | 1 | 0 | 1 | 1 |
| 1 | 1 | 0 | 1 | 1 |
| 1 | 0 | 1 | 1 | 1 |
| 1 | 0 | 1 | 1 | 1 |
| 1 | 0 | 1 | 1 | 1 |
| 1 | 1 | 1 | 1 | 1 |
| 1 | 1 | 1 | 0 | 1 |
| 1 | 1 | 1 | 0 | 1 |
| 1 | 1 | 1 | 0 | 1 |

17) What will be the minimum accuracy you can get?

A) Always greater than 70%

B) Always greater than or equal to 70%

C) It can be less than 70%

D) None of these

Solution: C

Refer to the table below for models M1, M2 and M3.

| Actual predictions | M1 | M2 | M3 | Output |
|---|---|---|---|---|
| 1 | 1 | 0 | 0 | 0 |
| 1 | 1 | 1 | 1 | 1 |
| 1 | 1 | 0 | 0 | 0 |
| 1 | 0 | 1 | 0 | 0 |
| 1 | 0 | 1 | 1 | 1 |
| 1 | 0 | 0 | 1 | 0 |
| 1 | 1 | 1 | 1 | 1 |
| 1 | 1 | 1 | 1 | 1 |
| 1 | 1 | 1 | 1 | 1 |
| 1 | 1 | 1 | 1 | 1 |
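The two tables for questions 16 and 17 can be checked with a short majority-vote script. The M1, M2 and M3 columns below are copied from the tables; the function names and the `best_case` / `worst_case` labels are assumptions made for this sketch.

```python
def majority_vote(votes):
    """Return the label predicted by most estimators (binary 0/1)."""
    return 1 if sum(votes) * 2 > len(votes) else 0

def accuracy(pred, actual):
    return sum(p == a for p, a in zip(pred, actual)) / len(actual)

actual = [1] * 10

# Columns M1, M2, M3 from the table for question 16.
best_case = {
    "M1": [1, 1, 1, 0, 0, 0, 1, 1, 1, 1],
    "M2": [0, 0, 0, 1, 1, 1, 1, 1, 1, 1],
    "M3": [1, 1, 1, 1, 1, 1, 1, 0, 0, 0],
}
# Columns M1, M2, M3 from the table for question 17.
worst_case = {
    "M1": [1, 1, 1, 0, 0, 0, 1, 1, 1, 1],
    "M2": [0, 1, 0, 1, 1, 0, 1, 1, 1, 1],
    "M3": [0, 1, 0, 0, 1, 1, 1, 1, 1, 1],
}

def ensemble_accuracy(models):
    rows = zip(models["M1"], models["M2"], models["M3"])
    combined = [majority_vote(r) for r in rows]
    return accuracy(combined, actual)

for name, models in [("question 16", best_case), ("question 17", worst_case)]:
    singles = [accuracy(col, actual) for col in models.values()]
    print(name, singles, ensemble_accuracy(models))
```

In both cases every estimator is 70% accurate on its own. What changes is where the errors fall: when they never overlap the vote is always right, and when they pile up on the same rows the vote does worse than any single estimator.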

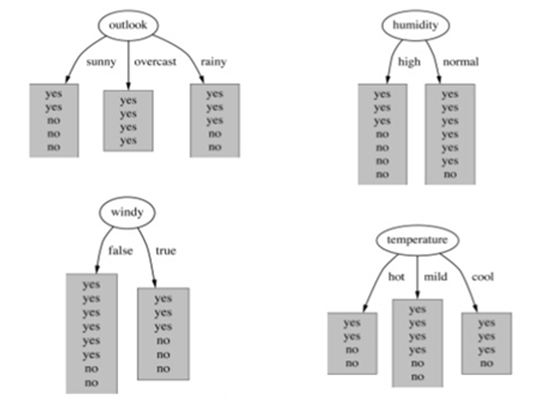

18) Suppose you are building a random forest model, which splits a node on the attribute that has the highest information gain. In the image below, select the attribute which has the highest information gain.

A) Outlook

B) Humidity

C) Windy

D) Temperature

Solution: A

Information gain increases with the average purity of subsets. So option A would be the right answer.
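To make "average purity of subsets" concrete, information gain can be computed as the entropy of the target minus the weighted entropy of the subsets after the split. The rows below are hypothetical toy data and are not the values from the quiz image; the function names are likewise assumptions.

```python
from collections import Counter
from math import log2

def entropy(labels):
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())

def information_gain(rows, attribute, target):
    """Entropy of the target minus the weighted entropy after the split."""
    before = entropy([r[target] for r in rows])
    groups = {}
    for r in rows:
        groups.setdefault(r[attribute], []).append(r[target])
    after = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return before - after

# Hypothetical toy rows; these are NOT the values from the quiz image.
rows = [
    {"outlook": "sunny", "windy": True, "play": "no"},
    {"outlook": "sunny", "windy": False, "play": "no"},
    {"outlook": "overcast", "windy": True, "play": "yes"},
    {"outlook": "overcast", "windy": False, "play": "yes"},
    {"outlook": "rainy", "windy": True, "play": "no"},
    {"outlook": "rainy", "windy": False, "play": "yes"},
]
gains = {a: information_gain(rows, a, "play") for a in ("outlook", "windy")}
print(max(gains, key=gains.get))
```

In this toy data two of the three outlook subsets are pure, so splitting on outlook removes far more entropy than splitting on windy.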

19) Which of the following is true about Gradient Boosting trees?

- In each stage, introduce a new regression tree to compensate for the shortcomings of the existing model

- We can use the gradient descent method to minimize the loss function

A) 1

B) 2

C) 1 and 2

D) None of these

Solution: C

Both are true and self explanatory
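Both statements show up directly in a from-scratch sketch. The version below assumes squared loss, for which the negative gradient is simply the residual, and uses one-split regression stumps as the weak learners. The names `fit_stump` and `gradient_boost` and the toy data are hypothetical; this is an illustration, not how any particular library implements it.

```python
def fit_stump(x, residuals):
    """Best single-threshold regression stump under squared error."""
    best = None
    xs = sorted(set(x))
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        left = [r for xi, r in zip(x, residuals) if xi <= t]
        right = [r for xi, r in zip(x, residuals) if xi > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lm, rm)
    _, t, lm, rm = best
    return lambda xi: lm if xi <= t else rm

def gradient_boost(x, y, n_trees=50, learning_rate=0.1):
    base = sum(y) / len(y)            # stage 0: constant prediction
    pred = [base] * len(y)
    stumps = []
    for _ in range(n_trees):
        # For squared loss the negative gradient is the residual y - pred.
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        # Gradient-descent style step in function space.
        pred = [pi + learning_rate * stump(xi) for xi, pi in zip(x, pred)]
    return lambda xi: base + learning_rate * sum(s(xi) for s in stumps)

def mse(model, x, y):
    return sum((model(xi) - yi) ** 2 for xi, yi in zip(x, y)) / len(y)

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.2, 1.9, 3.2, 3.8, 5.1, 6.1]   # hypothetical toy data
for n in (1, 10, 50):
    print(n, round(mse(gradient_boost(x, y, n_trees=n), x, y), 4))
```

Each new stump is fitted to what the current model still gets wrong, so the training error shrinks as stages are added.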

20) True-False: Bagging is suitable for high variance low bias models?

A) TRUE

B) FALSE

Solution: A

Bagging is suitable for high variance low bias models, or you can say for complex models.

21) Which of the following is true when you choose the fraction of observations for building the base learners in a tree based algorithm?

A) Decreasing the fraction of samples to build base learners will result in a decrease in variance

B) Decreasing the fraction of samples to build base learners will result in an increase in variance

C) Increasing the fraction of samples to build base learners will result in a decrease in variance

D) Increasing the fraction of samples to build base learners will result in an increase in variance

Solution: A

Answer is self explanatory

Context 22-23

Suppose you are building a Gradient Boosting model on data which has millions of observations and thousands of features. Before building the model you want to consider different parameter settings for time measurement.

22) Consider the hyperparameter "number of trees" and arrange the options in terms of time taken by each hyperparameter for building the Gradient Boosting model?

Note: remaining hyperparameters are same

- Number of trees = 100

- Number of trees = 500

- Number of trees = 1000

A) 1~2~3

B) 1<2<3

C) 1>2>3

D) None of these

Solution: B

The time taken to build 1000 trees is maximum and the time taken to build 100 trees is minimum, which is given in solution B.

23) Now, consider the learning rate hyperparameter and arrange the options in terms of time taken by each hyperparameter for building the Gradient Boosting model?

Note: Remaining hyperparameters are same

1. learning rate = 1

2. learning rate = 2

3. learning rate = 3

A) 1~2~3

B) 1<2<3

C) 1>2>3

D) None of these

Solution: A

Since learning rate doesn't affect time, all learning rates would take equal time.

24) In gradient boosting it is important to use the learning rate to get optimum output. Which of the following is true about choosing the learning rate?

A) Learning rate should be as high as possible

B) Learning rate should be as low as possible

C) Learning rate should be low but it should not be very low

D) Learning rate should be high but it should not be very high

Solution: C

Learning rate should be low, but it should not be very low; otherwise the algorithm will take very long to finish training because you need to increase the number of trees.
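A rough way to see why a very low learning rate needs many more trees: under the idealized assumption that each weak learner fits the current residual exactly, every stage leaves a fraction (1 - learning rate) of the residual behind. The function name `rounds_needed` and the tolerance are assumptions for this sketch, and real models will not follow this curve exactly.

```python
def rounds_needed(learning_rate, tolerance=0.01):
    """Stages until the remaining residual fraction drops to `tolerance`.

    Idealized assumption: every weak learner fits the current residual
    exactly, so each stage leaves (1 - learning_rate) of it behind.
    """
    remaining, rounds = 1.0, 0
    while remaining > tolerance:
        remaining *= 1.0 - learning_rate
        rounds += 1
    return rounds

for lr in (0.5, 0.1, 0.01, 0.001):
    print(lr, rounds_needed(lr))
```

The number of stages grows roughly in proportion to 1 / learning rate, which is the training-time cost the answer warns about.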

25) [True or False] Cross validation can be used to select the number of iterations in boosting; this procedure may help reduce overfitting.

A) TRUE

B) FALSE

Solution: A
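One way to picture the procedure in question 25: record the validation loss after every boosting iteration in each fold, average across folds, and keep the iteration count with the lowest mean. The loss values below are hypothetical and the function name `best_iteration` is an assumption.

```python
# Hypothetical validation loss per boosting iteration for 3 CV folds.
fold_losses = [
    [0.90, 0.62, 0.48, 0.41, 0.40, 0.43, 0.47],
    [0.88, 0.60, 0.50, 0.44, 0.42, 0.44, 0.49],
    [0.93, 0.65, 0.47, 0.40, 0.41, 0.45, 0.50],
]

def best_iteration(fold_losses):
    """1-based iteration count with the lowest mean validation loss."""
    n_iter = len(fold_losses[0])
    mean_loss = [sum(f[i] for f in fold_losses) / len(fold_losses)
                 for i in range(n_iter)]
    return mean_loss.index(min(mean_loss)) + 1

print(best_iteration(fold_losses))
```

Stopping at that iteration avoids the later stages, where the validation loss starts rising again while the training loss would keep falling.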

26) When you use a boosting algorithm you always consider weak learners. Which of the following is the main reason for having weak learners?

- To prevent overfitting

- To prevent underfitting

A) 1

B) 2

C) 1 and 2

D) None of these

Solution: A

To prevent overfitting, since the complexity of the overall learner increases at each step. Starting with weak learners implies the final classifier will be less likely to overfit.

27) To apply bagging to regression trees which of the following is/are true in such case?

- We build the N regression trees with N bootstrap samples

- We take the average of the N regression trees

- Each tree has a high variance with low bias

A) 1 and 2

B) 2 and 3

C) 1 and 3

D) 1,2 and 3

Solution: D

All of the options are correct and self explanatory
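The first two statements describe the whole bagging recipe, which can be sketched as follows. To keep the example short, a one-split stump stands in for a full regression tree, so it does not demonstrate the high variance, low bias property of deep trees. All names and the toy data are hypothetical.

```python
import random

def fit_stump(x, y):
    """Depth-1 regression tree: one threshold, a mean on each side."""
    xs = sorted(set(x))
    best = (float("inf"), None, sum(y) / len(y), sum(y) / len(y))
    for lo, hi in zip(xs, xs[1:]):
        t = (lo + hi) / 2
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
        if sse < best[0]:
            best = (sse, t, lm, rm)
    _, t, lm, rm = best
    # If the sample had a single distinct x, fall back to the overall mean.
    return lambda xi: lm if t is None or xi <= t else rm

def bagged_trees(x, y, n_trees, rng):
    trees = []
    for _ in range(n_trees):
        # Step 1: one bootstrap sample (rows drawn with replacement) per tree.
        idx = [rng.randrange(len(x)) for _ in range(len(x))]
        trees.append(fit_stump([x[i] for i in idx], [y[i] for i in idx]))
    # Step 2: the bagged prediction is the average over the N trees.
    return lambda xi: sum(t(xi) for t in trees) / len(trees)

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
y = [1.1, 0.9, 1.2, 1.0, 3.1, 2.9, 3.0, 3.2]   # hypothetical toy data
model = bagged_trees(x, y, n_trees=25, rng=random.Random(0))
print(round(model(2.0), 2), round(model(7.0), 2))
```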

28) How to select the best hyperparameters in tree based models?

A) Measure performance over training data

B) Measure performance over validation data

C) Both of these

D) None of these

Solution: B

We always consider the validation results to compare with the test result.

29) In which of the following scenarios is gain ratio preferred over Information Gain?

A) When a categorical variable has a very large number of categories

B) When a categorical variable has a very small number of categories

C) Number of categories is not the reason

D) None of these

D) None of these

Solution: A

For high cardinality problems, gain ratio is preferred over the Information Gain technique.
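The effect is easiest to see with an extreme case: a feature that has a different value in every row. The sketch below computes gain ratio as information gain divided by split information (the entropy of the feature itself). The data and function names are hypothetical.

```python
from collections import Counter
from math import log2

def entropy(values):
    n = len(values)
    return -sum((c / n) * log2(c / n) for c in Counter(values).values())

def gain_and_ratio(feature, target):
    """Return (information gain, gain ratio) for one categorical feature."""
    groups = {}
    for f, t in zip(feature, target):
        groups.setdefault(f, []).append(t)
    n = len(target)
    gain = entropy(target) - sum(len(g) / n * entropy(g) for g in groups.values())
    split_info = entropy(feature)  # large when there are many categories
    return gain, (gain / split_info if split_info > 0 else 0.0)

# Hypothetical data: a unique ID per row versus a two-level feature.
target = ["yes", "yes", "yes", "no", "no", "no", "yes", "no"]
row_id = ["a", "b", "c", "d", "e", "f", "g", "h"]
weather = ["sun", "sun", "sun", "rain", "rain", "rain", "sun", "sun"]

print(gain_and_ratio(row_id, target))
print(gain_and_ratio(weather, target))
```

The ID column gets the highest possible information gain because every subset is trivially pure, yet its gain ratio is lower than that of the two-level feature once the split information penalty is applied.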

30) Suppose you are given the following scenario for training and validation error for Gradient Boosting. Which of the following hyperparameters would you choose in such a case?

| Scenario | Depth | Training Error | Validation Error |
|---|---|---|---|
| 1 | 2 | 100 | 110 |
| 2 | 4 | 90 | 105 |
| 3 | 6 | 50 | 100 |
| 4 | 8 | 45 | 105 |
| 5 | 10 | 30 | 150 |

A) 1

B) 2

C) 3

D) 4

Solution: B

Scenarios 2 and 4 have the same validation accuracy, but we would select 2 because a lower depth is the better hyperparameter.

Overall Distribution

Below is the distribution of the scores of the participants:

You can access the scores here. More than 350 people participated in the skill test and the highest score obtained was 28.

End Notes

I tried my best to make the solutions as comprehensive as possible, but if you have any questions / doubts please drop in your comments below. I would love to hear your feedback about the skill test. For more such skill tests, check out our current hackathons.

Learn, engage, compete, and get hired!

Source: https://www.analyticsvidhya.com/blog/2017/09/30-questions-test-tree-based-models/
